In The Matrix Reloaded, Morpheus, after his ship the Nebuchadnezzar is sunk, makes a biblical reference: “I have dreamed a dream, but now the dream is gone from me.” The line became a shorthand for the disappointment of hardcore Matrix fans who watched the pathbreaking original dissolve into bubble-gum pulp fiction sequels. It is also a sentiment shared by those who have spent years waiting for the arrival of the deus ex machina of Artificial General Intelligence.

Cinema trained us to expect Agent Smith or the Terminator. What we got instead were malfunctioning interns that forget their brief after three prompts, which is not entirely unlike regular interns. If there is one area where artificial intelligence has genuinely altered daily life, for better or worse, it is generative AI.

Prophets in the Wilderness

A decade and a half ago, people working seriously on neural networks were dismissed as prophets in the wilderness. One of them was Professor Geoffrey Hinton, whose research group used NVIDIA’s CUDA platform to recognise human speech. Hinton encouraged his students to experiment with GPUs. One of them, Alex Krizhevsky, along with Ilya Sutskever, trained a visual neural network using two consumer-grade NVIDIA graphics cards bought online. Running them from Krizhevsky’s parents’ house, and racking up a sizeable electricity bill, they trained the model on millions of images in a week, achieving results that rivalled Google’s efforts using tens of thousands of CPUs.

That moment reshaped the industry. If neural networks could see, what else could they learn? The answer, as Jensen Huang would later discover, was nearly everything.

When ChatGPT launched and it became clear that OpenAI’s models were running on NVIDIA’s chips, market perception around the company shifted dramatically. Valuations soared. The rest, as they say, is history.

Hinton would go on to share the Nobel Prize in Physics in 2024. Huang emerged as the arms dealer of the AI race, building a company where vast numbers of employees became dollar millionaires. For laypeople, that was the true arrival of generative AI, and for capitalists, it promised something intoxicating: companies that scale without hiring, produce without friction, and grow without payroll.

The AI Dream

AI does not need smoke breaks. It does not badmouth its boss, unless the boss is Elon Musk. It does not require me-time. Yet the promised productivity miracle, the idea that AI would replace workers by making individuals superhumanly efficient, has mostly fizzled. In practice, it has flooded offices with AI slop, rendering LinkedIn posts and internal emails nearly unreadable.

The disappointment was captured neatly in a viral tweet: “I want AI to do my laundry and dishes so that I can do art and writing, not for AI to do my art and writing so that I can do my laundry and dishes.”

Like most proclamations from the platform formerly known as Twitter, this was an exaggeration. Generative AI has undeniably made certain tasks easier. Research is faster. Summaries are cleaner. Editing copy is less painful. For writers, it offers something rare: an unbiased copy editor that does not inject its own ideology into the text. And even if large language models never write great literature, they produced something unmistakable this year: genuinely good images.

Designer of the Year

With the right prompts, the prompt engineer briefly became an amalgamation of Vincent van Gogh, Salvador Dalí, and Bill Watterson. While Time magazine crowned “AI Architects” as its Person of the Year, we believe Artificial Intelligence quietly earned another title: Designer of the Year.

For a long time, AI images were the purest form of slop. They were instantly recognisable. Wax-like faces. Mangled fingers. Text that looked as if it had been written by a drunk monk fleeing Titivillus, the medieval patron demon of scribes. They were offered as proof that AI could not compete with even a doodling toddler.

And then, over the course of a year, everything changed.

The improvement did not arrive through existential angst, but through engineering. Early image models like DALL-E 2 or the first versions of Stable Diffusion were diffusion systems loosely guided by text. They began with noise and slowly guessed their way toward an image, like asking a severely myopic person to glance at The Starry Night and recreate it from memory. The result often inspired a desire to cut off an ear in frustration. Text and image lived in separate systems, producing high-resolution hallucinations rather than understanding.

That changed when image generation stopped being a side project and became fully integrated into multimodal models. OpenAI folded images into GPT-4o. Google followed with Gemini’s image systems, informally nicknamed Nano Banana. Stability AI rebuilt its stack with Stable Diffusion 3. Midjourney quietly re-engineered its later models along similar lines.

These systems stopped drawing objects and started constructing scenes.

They learned that light comes from somewhere. That shadows obey rules. That faces remain faces across time. That objects occupy space consistently. Most importantly, they learned memory. Earlier generators forgot everything between prompts. Ask for the same character twice and you got two strangers. Ask for a small edit and the entire image panicked.

In 2025, that stopped.

Characters persist. Colour palettes hold. Layouts remain intact. You can remove a background without altering a face. You can add text without destroying composition. Image generation ceased being a slot machine and became a tool. For the first time, the machine could explain the logic behind what it was producing.

The Ghibli Craze

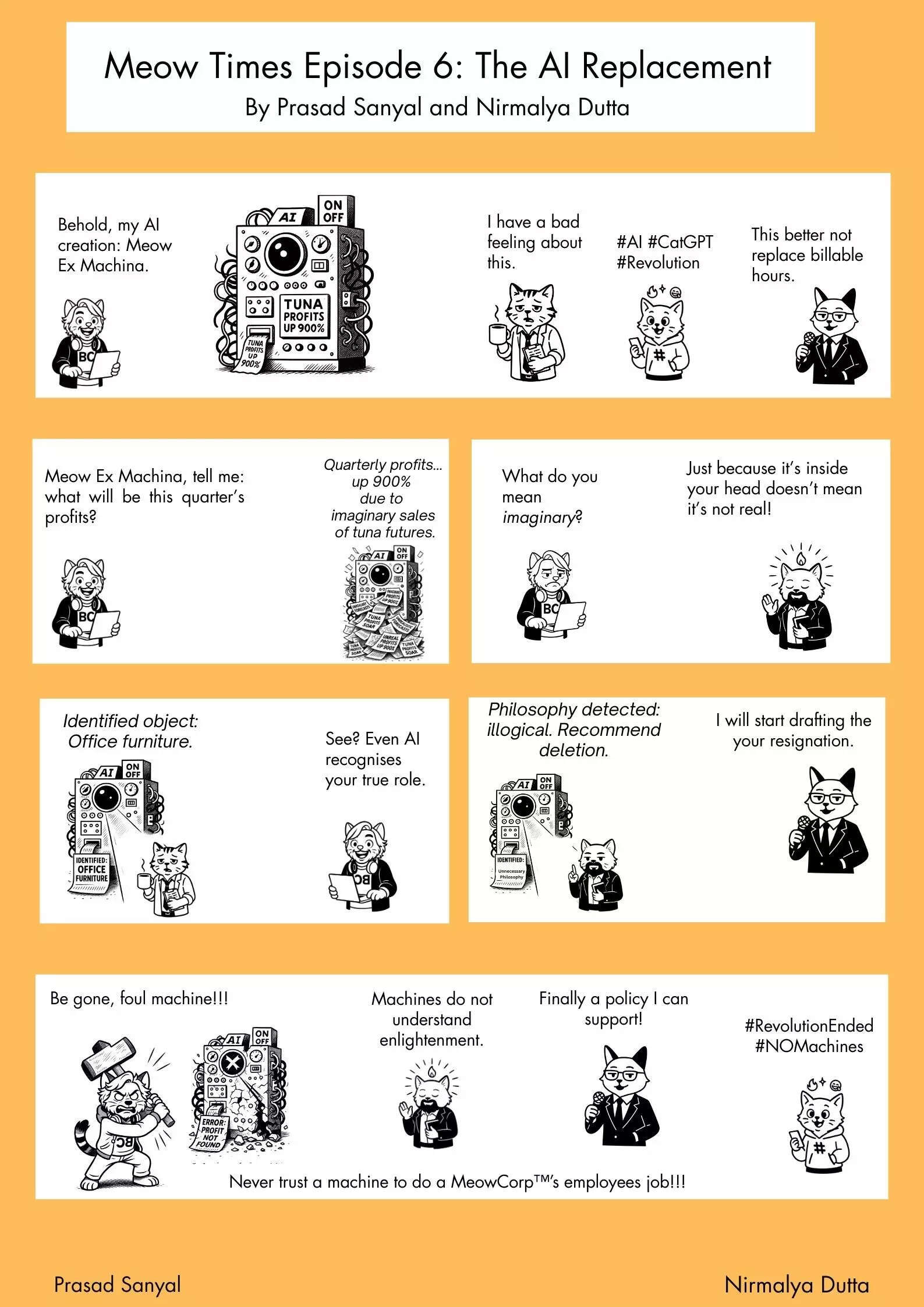

For most users, this shift arrived disguised as the Studio Ghibli craze. People turned themselves into softer, cuter versions of reality, briefly threatening to boil the planet with GPU demand. Like many great technologies, it began as novelty. Then something else emerged.

Family photographs, pets, childhood streets were transformed into scenes that felt emotionally correct. Not parody. Not kitsch. Convincing homage. Lighting made sense. Mood held. One image went viral, then thousands followed, because that is how internet culture works.

The deeper shift, however, appeared outside art.

Once models learned layout and consistency, infographics exploded. So did diagrams, explainers, cartoons, presentations. These are not artistic challenges. They are attention problems. They depend on hierarchy, clarity, and flow.

Here, Google held an unfair advantage. It has spent decades studying how humans look at screens. That accumulated knowledge flowed directly into its image models. Charts became legible. Labels landed where the eye expected them. White space acquired intention. AI visuals stopped being decorative and started communicating.

Cartoons improved for the same reason. Early AI cartoons were uncanny because they were too polite, too smooth, like humour approved by HR. Once exaggeration became a choice rather than an error, caricature began to work. Faces stretched where they should. Minimalism stopped looking unfinished.

Deus Artifex

All of which means we remain far from the promised deus ex machina, an omniscient intelligence descending from the heavens to answer every question. What we received instead was something else entirely: a deus artifex. A god who builds.

A system that understands composition better than most humans. That respects constraints. That remembers state. That produces competent results instantly. Not inspired. Not obsessive. Just relentlessly adequate.

That is why Artificial Intelligence deserves Designer of the Year. Not because it is creative, and not because it is sentient, but because it has collapsed the cost of visual competence. It has erased apprenticeship. Bad drafts. The humiliation of being visibly terrible before becoming good.

Creation did not die. It changed shape. It became selection, curation, optimisation.

The cost is not the death of art. Art survives worse things than algorithms. The cost is subtler. When the easiest path to beauty becomes the most travelled one, beauty converges. Aesthetic flattening is not a bug. It is an outcome.

Van Gogh did not paint sunflowers because sunflowers were trending. Dalí did not melt clocks because surrealism performed well. Their styles were not filters. They were necessities.

The machine can reproduce the surface of that necessity flawlessly. It cannot feel the need behind it.

We did not get god. We did not get the devil. We got a better craftsman.

So perhaps Morpheus was wrong. The dream that he dreamed wasn’t taken away. It just changed form.